Prof. Fábri: “Rankings are a media phenomenon of postmodern mass democracy.”

Mr. President, Dear Colleagues!

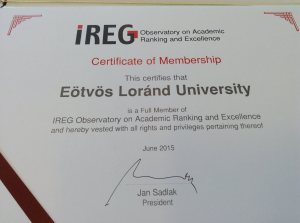

I welcome you on behalf of Hungary’s oldest and, judging by ranking positions as well, most competitive research university.

It is a great honor for us to be a member of the International Ranking Expert Group (IREG) Observatory, one of the most important global organizations in higher education. This membership offers us a chance to learn about new viewpoints, methods, and impacts of university rankings. I hope that you, too, can make use of the results of our own research and experiments on ranking-related questions.

My institution not only observes and evaluates its ranking positions but also analyzes such results through the work of a dedicated research group established for this purpose. The best-known Hungarian university rankings are also the result of this team’s work.

Our research in the fields of social psychology, sociology, philosophy of science, and social communication provides the theoretical background against which we interpret rankings and their effects on universities.

This approach goes back to the first institutional ranking publications at the turn of the 20th century by the psychologist James Cattell, editor of Science magazine. Indeed, an international “race” of universities in the form of a ranking is not a new phenomenon: it has been a way of comparing higher education and scientific institutions for as long as we can remember. A pioneering ranking of this kind was developed as early as 1863 by Carl Koristka, professor at the Prague Polytechnic Institute.

We obviously have neither the time nor the wish to debate every methodological problem related to creating global higher education rankings, since the conferences held over the past five years (mainly those organized by IREG in Shanghai, London, and Vienna, to name just a few) as well as the publications on this topic have dissected them quite thoroughly. Bias stemming from a particular scientific perspective or language, and the discretionary selection and weighting of indicators, are recurring points of criticism in the literature on rankings.

In our interpretation, rankings reflect the media-centered nature of society and its effect on the university world. They are a media phenomenon of postmodern mass democracy. Because the traditional authority of the university and of scientific knowledge has declined, and everything seems countable, the media and public opinion appear competent to “vote” on higher education.

● They are postmodern because they treat scientific and teaching performance, as well as social and economic influence, as tangible things, and they provide a self-explanatory framework in which they themselves are to be interpreted.

● They prevail through mass democracy, since everything is interpreted in terms of plurality and cardinality.

● They are a media phenomenon because their power stems from journalists translating the envied and hard-to-understand language and intellectual productivity of higher education into an easily digestible, “instantly” available form.

But there is a special problem for the East European region.

Nature magazine recently published an instructive compilation marking the quarter-century anniversary of the fall of the Berlin Wall. It shows clearly that the division of Europe in the field of science has not decreased at all. We observe a strikingly one-sided distribution of ERC Grants, an initiative of great significance in the European Union: while scientists from the former socialist bloc won 74, Western scientists received 3,014 in total (the outstanding share of Hungarian recipients should not make us forget the inequality between these regions). The situation is no better with regard to funded research projects. This confirms the validity of the Matthew effect, the world-renowned thesis of Robert K. Merton: those who already have a name and standing in the scientific community encounter more opportunities than those who have nothing, who therefore fall further and further behind.

Of course, the main question lies in the globalization of scientific research and our place in this global competition rather than in the rankings. To deal with the frustration stemming from this matter, we should turn not to rankings but to national science policy. We need increased financial support to stimulate competition and performance, autonomous scientific organizations that fully uphold academic values, and appropriate ethical standards within the scientific community.

Universities and ranking developers should work together to find ways of providing a more realistic picture of the situation and competitiveness of regional universities. More generally, however, three new aspects should be introduced into the ranking game:

● Recognizing the performance of universities in fields that are not visible in rankings, such as teacher training or the cultural and economic impact of institutions. These cannot be measured with the usual “ranking methodologies”: we need innovation in this area. U-Multirank has been making an effort to initiate change, but it is not yet clear whether it will also be used as a ranking tool. Let me bring up ELTE’s ranking position as an example. You can see on the graph the indicators these university rankings use. Since ELTE is mainly responsible for educating Hungarian teachers and instructors, social workers, and lawyers and legal professionals trained to meet Hungarian national standards and regulations, these achievements barely appear in international publication lists and can hardly be used to attract instructors and students from an international pool. Only 30 percent of ELTE’s scientific and scholarly work is considered relevant by these indicators; in other words, only 30 percent of ELTE’s work is actually visible in global rankings. It would be a huge mistake and an unforgivable betrayal to undervalue fields of expertise that have no official ranking chart, along with the professors and students who belong to them.

● We are still teaching at universities. The opinions of the users who fund us, especially students and their families, should be a primary factor. The standards and quality of universities are established in lecture halls and seminars, not by statistics or indicators.

● The only thing that matters in the Olympic Games is who crosses the finish line first: this ranks only speed, regardless of financial background or training conditions. But if a professor can achieve great results even with modest support, he or she probably has an adaptability and resourcefulness that may prove quite useful in teaching or in carrying out research projects. Therefore, from the point of view of the target groups of university rankings, we should bring funding and finances into focus. The illustration shown above presents a calculation on this matter.

Rankings will continue to evolve, and the Berlin Principles do not sufficiently guarantee their relevance. Therefore, universities, especially those in weaker positions, will only be able to move up in the rankings if they can establish media communication channels both outside and within rankings.

But, as was stated at an earlier IREG Conference, rankings are here to stay despite sharp criticism. They will continue to evolve, and our common mission is to improve them, both for a realistic social perception of universities and for the meritocratic autonomy of academic values. So universities and ranking developers should work together to find ways of providing a more realistic picture of the situation and excellence of universities.

Thank you for your attention, and I hope for fruitful cooperation within the framework of IREG as well!

Dr. habil György Fábri (1964) is a habilitated associate professor (Institute of Research on Adult Education and Knowledge Management, Faculty of Education and Psychology, Eötvös Loránd University) and head of the Social Communication Research Group. Areas of research: university philosophy, sociology of higher education and science, science communication, social communication, church sociology. He has published monographs on the transformation of Hungarian higher education during the change of regime (Vienna, 1992) and on university rankings (Budapest, 2017). He has edited several scientific journals, and his university courses and publications cover communication theory, university philosophy, science communication, social representation, media and social philosophy, ethics, and church sociology.

Dr. Mircea Dumitru is a Professor of Philosophy at the University of Bucharest (since 2004). Rector of the University of Bucharest (since 2011). President of the European Society of Analytic Philosophy (2011 – 2014). Corresponding Fellow of the Romanian Academy (since 2014). Minister of Education and Scientific Research (July 2016 – January 2017). Visiting Professor at Beijing Normal University (2017 – 2022). President of the International Institute of Philosophy (2017 – 2020). President of the Balkan Universities Association (2019 – 2020). He holds a PhD in Philosophy from Tulane University, New Orleans, USA (1998) with a topic in modal logic and philosophy of mathematics, and another PhD in Philosophy from the University of Bucharest (1998) with a topic in philosophy of language. Invited Professor at Tulsa University (USA), CUNY (USA), NYU (USA), Lyon 3, ENS Lyon, University of Helsinki, CUPL (Beijing, China), and Peking University (Beijing, China). Main areas of research: philosophical logic, metaphysics, and philosophy of language. Main publications: Modality and Incompleteness (UMI, Ann Arbor, 1998); Modalitate si incompletitudine (Paideia Publishing House, 2001, in Romanian; the book received the Mircea Florian Prize of the Romanian Academy); Logic and Philosophical Explorations (Humanitas, Bucharest, 2004, in Romanian); Words, Theories, and Things. Quine in Focus (ed.) (Pelican, 2009); Truth (ed.) (Bucharest University Publishing House, 2013); article on the Philosophy of Kit Fine, in The Cambridge Dictionary of Philosophy, Third Edition, Robert Audi (ed.) (Cambridge University Press, 2015); Metaphysics, Meaning, and Modality. Themes from Kit Fine (ed.) (Oxford University Press, forthcoming).

Mr. Degli Esposti is Full Professor at the Department of Computer Science and Engineering, Deputy Rector of Alma Mater Studiorum Università di Bologna, Dean of Biblioteca Universitaria di Bologna, Head of the Service for the Health and Safety of People in the Workplace, President of the Alma Mater Foundation, and Delegate for Rankings.

Ben joined QS in 2002 and has led the institutional performance insights function of QS since its emergence following the early success of the QS World University Rankings®. Today, his team is responsible for the operational management of all major QS research projects, including the QS World University Rankings® and its variants by region and subject. Comprising over 60 people in five international locations, the team also operates a widely adopted university rating system, QS Stars, and a range of commissioned business intelligence and strategic advisory services. Ben has travelled to over 50 countries and spoken on his research in almost 40. He has personally visited over 50 of the world’s top 100 universities, amongst countless others, and is a regular and sought-after speaker on the conference circuit. Ben is married and has two sons; if he had any free time, it would be spent reading, watching movies, and skiing.

Anna Urbanovics is a PhD student at the Doctoral School of Public Administration Sciences of the University of Public Service and is studying for a Master of Arts in Sociology at Corvinus University of Budapest. She graduated with a Master of Arts in International Security Studies from the University of Public Service. She does research in scientometrics and international relations.

Since 1 February 2019, Minister Palkovics, as Government Commissioner, has been responsible for coordinating the tasks prescribed in Act XXIV of 2016 on the promulgation of the Agreement between the Government of Hungary and the Government of the People’s Republic of China on the development, implementation and financing of the Hungarian section of the Budapest-Belgrade Railway Reconstruction Project.

He is the past President of the Health and Health Care Economics Section of the Hungarian Economics Association.

Based in Berlin, Zuzanna Gorenstein has been Head of Project of the German Rectors’ Conference (HRK) service project “International University Rankings” since 2019. Her work at HRK encompasses the conceptual development and implementation of targeted advisory, networking, and communication measures for German universities’ ranking officers. Before joining the HRK, Zuzanna Gorenstein herself served as ranking officer of Freie Universität Berlin.

His books on the mathematical modeling of chemical, biological, and other complex systems have been published by Princeton University Press, MIT Press, and Springer. His new book, RANKING: The Unwritten Rules of the Social Game We All Play, was recently published by Oxford University Press and is already being translated into several languages.